DeepSeek - The open source AI model revolution

AI has been buzzing, and ChatGPT has been one of those killer apps that revolutionised the whole AI ecosystem, so much so that even small kids now know terms like Generative AI. That's good, but what OpenAI built was not open source (they did publish some of their research), meaning the models were not open. That said, OpenAI has developed several open-source models across various domains:

Whisper: A multilingual speech recognition system capable of transcribing and translating multiple languages.

CLIP (Contrastive Language–Image Pre-training): A model that connects images and text, enabling tasks like image classification and zero-shot learning.

Jukebox: A neural network that generates music with vocals in various genres and styles.

Point-E: A model designed for generating 3D point clouds from textual prompts.

But their GPT models, the foundation models behind ChatGPT, are proprietary.

Enter 2025 - DeepSeek R1

It's been making waves on the internet over the past few weeks, and people are going crazy over how a Chinese company has come up with a model, trained at a fraction of the cost, that performs on par with (and on some benchmarks ahead of) OpenAI's o1 reasoning model. They have made it open source (MIT licence), free to use and to commercialise too! This is what open source disruption means!

Quick facts

Open source and free to use

Technical report published

Performance on par with OpenAI o1

$2.19 per million output tokens vs $60 per million output tokens for o1
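To put that price gap in concrete terms, here is a quick back-of-the-envelope calculation. It uses only the two per-million-output-token prices quoted above; the 5 million token volume is a hypothetical workload, and input tokens (priced separately) are ignored.

```python
# Per-million-output-token API prices quoted above, in USD (these change over time).
DEEPSEEK_R1_PER_M = 2.19
OPENAI_O1_PER_M = 60.00


def output_cost(tokens: int, price_per_million: float) -> float:
    """Cost in USD for a given number of output tokens."""
    return tokens / 1_000_000 * price_per_million


tokens = 5_000_000  # hypothetical monthly output volume
r1 = output_cost(tokens, DEEPSEEK_R1_PER_M)
o1 = output_cost(tokens, OPENAI_O1_PER_M)
print(f"R1: ${r1:.2f}  o1: ${o1:.2f}  ratio: {o1 / r1:.1f}x")
# prints: R1: $10.95  o1: $300.00  ratio: 27.4x
```

Whatever the volume, the ratio stays the same: o1 output costs roughly 27x more per token at these prices.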

Watch my detailed video on what it is and how to run it locally using Ollama and LlamaEdge.

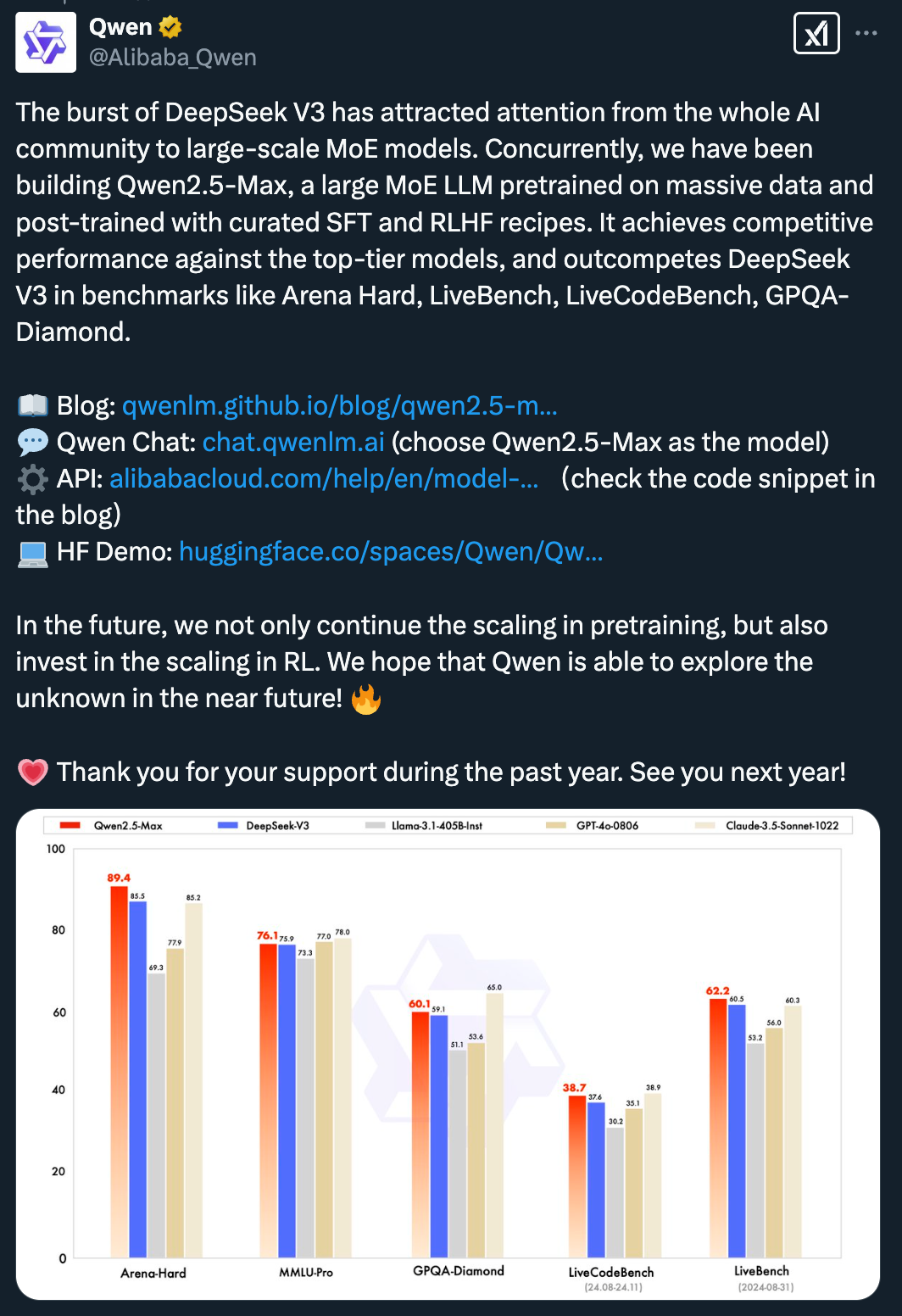
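Once a model is running locally with Ollama, you can also call it from code. Below is a minimal sketch using only the Python standard library against Ollama's local REST endpoint (`/api/generate` on port 11434). It assumes you have already pulled a DeepSeek R1 tag with `ollama pull deepseek-r1:7b`; the tag is an assumption, and any locally pulled R1 variant works. R1 models may emit their reasoning inside `<think>...</think>` before the final answer, so the sketch also includes a small helper that strips it.

```python
import json
import re
import urllib.request

# Ollama's default local REST endpoint.
OLLAMA_URL = "http://localhost:11434/api/generate"
MODEL = "deepseek-r1:7b"  # assumption: this tag was pulled with `ollama pull`


def build_request(prompt: str, model: str = MODEL) -> urllib.request.Request:
    """Build a non-streaming POST request to Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def strip_think(text: str) -> str:
    """Drop the <think>...</think> reasoning block an R1 model may emit before its answer."""
    return re.sub(r"<think>.*?</think>", "", text, flags=re.DOTALL).strip()


def ask(prompt: str, model: str = MODEL) -> str:
    """Send the prompt to a running Ollama server and return only the final answer."""
    with urllib.request.urlopen(build_request(prompt, model)) as resp:
        body = json.loads(resp.read())
    return strip_think(body["response"])
```

With Ollama running, `ask("Why is the sky blue? Answer in one sentence.")` returns just the answer text. Setting `"stream": False` keeps the example simple by getting one JSON object back instead of a stream of chunks.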

DeepSeek was not the only one, either: a couple of days back, Alibaba launched Qwen 2.5 Max, an AI model that writes, generates images and videos, and does web search.

A bit more…

Hugging Face is a key player in AI and machine learning: it provides an open-source platform for building, deploying, and sharing ML models. Often referred to as the "GitHub of ML," it enables collaboration through model hosting, dataset sharing, fine-tuning, and deployment. Hugging Face's Transformers library simplifies ML workflows, and its Spaces feature allows interactive demos. The company, founded in 2016, has partnerships with AWS, Google, and Nvidia. As AI adoption grows, Hugging Face's open ecosystem fosters innovation, challenging closed-source AI providers like OpenAI and Google.

So you can use Hugging Face to run, test, build, and train your AI models.

All in all, what I want to highlight most is the power of open source. Coming out of nowhere, DeepSeek has proven to be super innovative, and many folks, including Sam Altman (CEO of OpenAI), have called it an impressive model. This has also shaken the USA, India and other nations into action. We need to keep pushing each other to innovate more and more, and that can only happen with open source!

What are your thoughts on this? Do mention them in the comments.

What have I been doing?

We have published some great content on Kubesimplify English, and my Kubestronaut series is ongoing on Kubesimplify Hindi.

Currently I am in Brussels for FOSDEM, where we have a DevPod booth and a talk. So if you are around, do come and say hi!

I am creating more dope content on AI and cloud native, so stay tuned and make sure to follow/subscribe everywhere.

Stuff I have been reading

Solving GPU Challenges in CI/CD Pipelines with Dagger - Check out how to simplify GPU integration in CI/CD pipelines, addressing challenges like high costs, underutilization, and infrastructure complexity. By leveraging remote runners like Fly.io and Lambda Labs, Dagger enables on-demand GPU usage, persistent caching, and seamless deployment, making GPU-powered workflows more efficient and cost-effective.

What is observability 2.0? - Observability 2.0 enhances traditional monitoring by unifying telemetry data (metrics, logs, and traces), leveraging AI-driven anomaly detection, and enabling proactive troubleshooting in dynamic, distributed systems. It improves system reliability, reduces downtime, and aligns technical insights with business outcomes, making it essential for modern cloud-native architectures.

Run DeepSeek R1 on your own Devices in 5 mins - Shows how to run DeepSeek R1 on any device using LlamaEdge.

So you wanna write Kubernetes controllers? - Developing Kubernetes controllers seems easy with tools like Kubebuilder, but building production-grade, scalable, and reliable controllers requires deep understanding of API conventions, reconciliation logic, and controller patterns. This article highlights common pitfalls in controller development, such as poorly designed CRDs, misusing the Reconcile() method, not leveraging cached clients properly, and failing to implement best practices like observedGeneration and the expectations pattern, ultimately guiding developers toward writing efficient, idiomatic Kubernetes controllers.

A new era for Helm - Helm, one of the earliest cloud-native tools, has evolved into an industry-standard package manager for Kubernetes, widely used across enterprises and government agencies. In 2025, the Helm maintainers are focusing on growing contributor engagement, streamlining processes, and launching Helm v4, ensuring the project remains sustainable and innovative for the next decade.

Introducing vLLM Inference Provider in Llama Stack - The vLLM inference provider is now integrated into Llama Stack, enabling seamless deployment of high-performance AI inference models through collaboration between Red Hat AI Engineering and Meta’s Llama Stack team. This integration allows users to run inference workloads locally or on Kubernetes, leveraging vLLM’s OpenAI-compatible API for efficient and scalable AI model serving.
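Since the vLLM integration hinges on vLLM's OpenAI-compatible API, here is a minimal standard-library sketch of what such a call looks like. Note that it talks to a vLLM server directly rather than going through Llama Stack. It assumes a server started locally with something like `vllm serve deepseek-ai/DeepSeek-R1-Distill-Qwen-1.5B` on vLLM's default port 8000; the model choice and the `max_tokens` value are assumptions for illustration.

```python
import json
import urllib.request

# Assumption: `vllm serve deepseek-ai/DeepSeek-R1-Distill-Qwen-1.5B` is running locally
# on vLLM's default port; the "model" field must match the served model name.
VLLM_URL = "http://localhost:8000/v1/chat/completions"
MODEL = "deepseek-ai/DeepSeek-R1-Distill-Qwen-1.5B"


def build_chat_request(question: str, model: str = MODEL) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request for the vLLM server."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": question}],
        "max_tokens": 512,  # illustrative cap on the reply length
    }
    return urllib.request.Request(
        VLLM_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_answer(response: dict) -> str:
    """Pull the assistant's text out of an OpenAI-style chat completion response."""
    return response["choices"][0]["message"]["content"]


def chat(question: str) -> str:
    """Send the request to a running vLLM server and return the answer text."""
    with urllib.request.urlopen(build_chat_request(question)) as resp:
        return extract_answer(json.loads(resp.read()))
```

Because the request and response follow the OpenAI chat format, the same code can point at other OpenAI-compatible servers by changing `VLLM_URL` and `MODEL`.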

Awesome repos/learning resources

Learn from X

https://x.com/I_saloni92/status/1884175131347673213

https://x.com/ProfTomYeh/status/1884663076709879816

https://x.com/levie/status/1884053695626875123
