Being born as a human being is nothing less than a gift, and waking up every day is nothing less than a gift. We all get one life and a limited amount of time to live, out of which on average 33% goes into sleeping and 33% goes into initial education and a job. Very limited time remains to actually create an impact.

From the time we are born, we are given different tasks and milestones to complete, and even toddlers are competing: walking milestones, speaking milestones, and so on.

As students, we are put in a specific curriculum and given different options: to be a doctor, engineer, astronaut, sportsperson, etc. But what are we chasing?

Our job, our future, and the work we will be doing for the rest of our lives should be impactful in a positive way. What does this statement even mean? Whatever we do should serve a bigger goal, and helping humanity is what leaves a lasting impact. Chasing money is fine, but I think chasing to help solve complex problems to make this world a better place matters more.

There was a war-like situation between India and Pakistan over terrorism, and it was the first time that we experienced blackouts, sirens, and drone attacks. A huge thanks to our soldiers for fighting for our safety day in, day out.

AI is taking the world by storm, and keeping up with AI is like being glued to X every day. Literally every day, there is something new in this space: Anthropic, OpenAI Codex, Microsoft with their open source AI editor, and Google I/O being all about dope AI announcements. Google introduced the Google AI Ultra plan, combining Veo 3 and Flow to give users advanced AI video and creative capabilities, alongside expanded storage and premium services. AI Mode in Search is set to transform how users interact with Google, offering enhanced search queries and shopping features for a smarter, more personalized experience. Project Astra is an ambitious AI assistant for smart glasses, while Android XR lays the foundation for AR, MR, and VR ecosystems, with dedicated headsets expected next year. Altogether, these launches show how AI is just going too fast!!

What have I been doing?

Creating a Kubernetes course - Expect it in 2 weeks

Creating AI content - I have been exploring AI and creating some content around it too. I am also doing some very interesting podcasts, with a podcast on open source humanoids dropping next week.

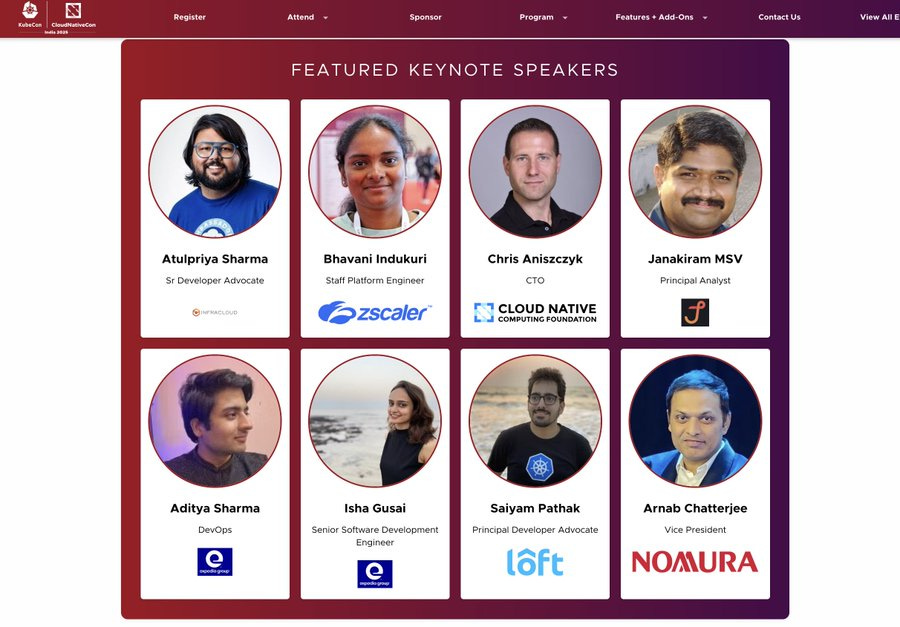

I will be giving a keynote at KubeCon India - See you there!

An in-person LLM workshop is coming to Chandigarh on 18th June, so make sure to sign up today!

Let's move to the awesome news and reads. Do subscribe to this newsletter before moving forward.

Awesome Reads/Announcements

Claude 4 - Anthropic launched Claude Opus 4 and Claude Sonnet 4. Anthropic describes Claude Opus 4 as the world’s best coding model, with sustained performance on complex, long-running tasks and agent workflows. Claude Sonnet 4 is a significant upgrade to Claude Sonnet 3.7, delivering superior coding and reasoning while responding more precisely to your instructions.

Announcing Flux 2.6 GA - Flux v2.6 marks the General Availability (GA) of the Flux Open Container Initiative (OCI) Artifacts features. The OCI artifacts support was first introduced in 2022, and since then we’ve been evolving Flux towards a Gitless GitOps model. In this model, the Flux controllers are fully decoupled from Git, relying solely on container registries as the source of truth for the desired state of Kubernetes clusters.

Kubernetes v1.33: Key Features, Updates, and What You Need to Know - In this post, I explain the Kubernetes 1.33 features and demo a couple of them on vCluster. What is your favorite Kubernetes 1.33 feature?

Implementing Karpenter In EKS (From Start To Finish) - In this blog post, you'll learn step-by-step how to get Karpenter up and running on EKS from the IAM role to the permissions and the deployment of Karpenter itself.

llm-d - Distributed Inference Serving on Kubernetes - This blog introduces llm-d, an open-source project for distributed LLM inference on Kubernetes, explaining its architecture, intelligent routing with kgateway, and how it improves performance and cost-efficiency. It highlights why traditional systems fall short and how llm-d solves key GPU and scaling challenges.

Amazon EKS MCP Server - The Amazon EKS MCP server integrates with AI code assistants to manage Kubernetes clusters, automate deployments, and simplify troubleshooting using natural language. The post highlights key features like automated cluster creation, resource lifecycle management, and intelligent debugging to enhance the entire app development workflow.

Introducing AI on EKS: powering scalable AI workloads with Amazon EKS - a new open source initiative from Amazon Web Services (AWS) designed to help customers deploy, scale, and optimize artificial intelligence/machine learning (AI/ML) workloads on Amazon Elastic Kubernetes Service (Amazon EKS). AI on EKS provides deployment-ready blueprints facilitating training, fine-tuning, and inference of multiple large language models (LLMs), infrastructure as code (IaC) templates to enable reference architectures as well as customizable platforms, benchmarks comparing different training and deployment strategies, and best practices for tasks such as model training, inference, fine-tuning, multi-model serving, and more.

Grafana v12.0 - The major updates in Grafana 12.0 include new dynamic dashboards, GitHub sync, improved Drilldown tools for metrics, logs, and traces, enhanced alerting, and a smoother migration to Grafana Cloud. It also introduces experimental themes, faster visualizations, and breaking changes like the removal of Angular plugins and stricter API and plugin checks.

Introducing NLWeb: Bringing conversational interfaces directly to the web - Microsoft introduced NLWeb, an open project designed to simplify the creation of natural language interfaces for websites, making it easy to turn any site into an AI-powered app. The post covers the technology and how web publishers can get started.

Awesome Repos/Resources

AI-Red-Teaming-Playground-Labs - Playground labs for running AI red teaming trainings, including the infrastructure.

llm-d - llm-d is a Kubernetes-native, high-performance distributed LLM inference framework.
